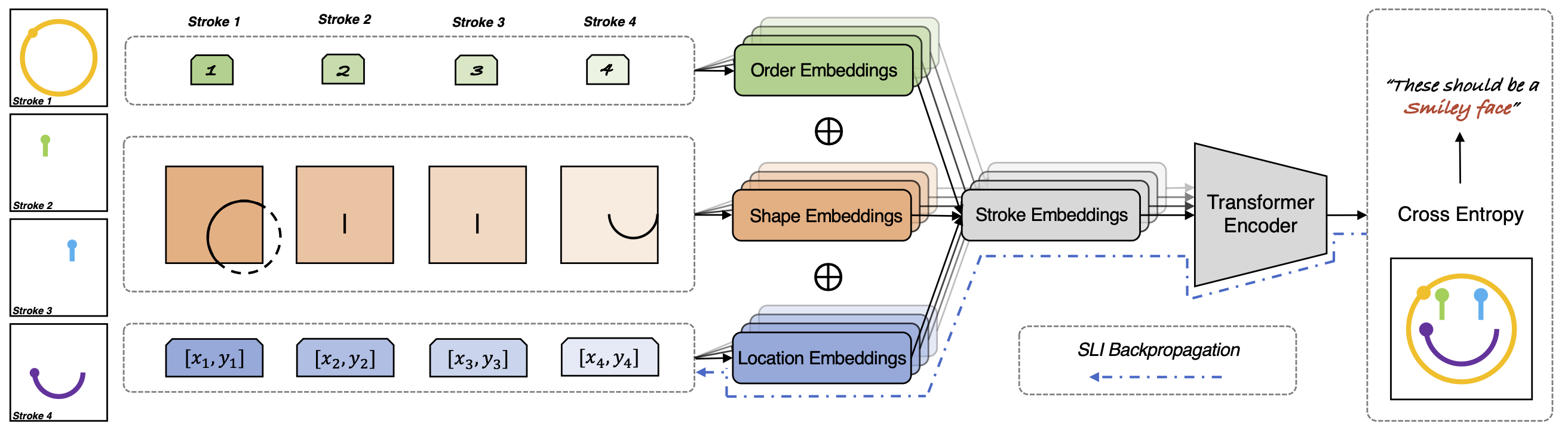

    @inproceedings{qu2023sketchxai,
      title={SketchXAI: A First Look at Explainability for Human Sketches},
      author={Qu, Zhiyu and Gryaditskaya, Yulia and Li, Ke and Pang, Kaiyue and Xiang, Tao and Song, Yi-Zhe},
      booktitle={CVPR},
      year={2023}
    }

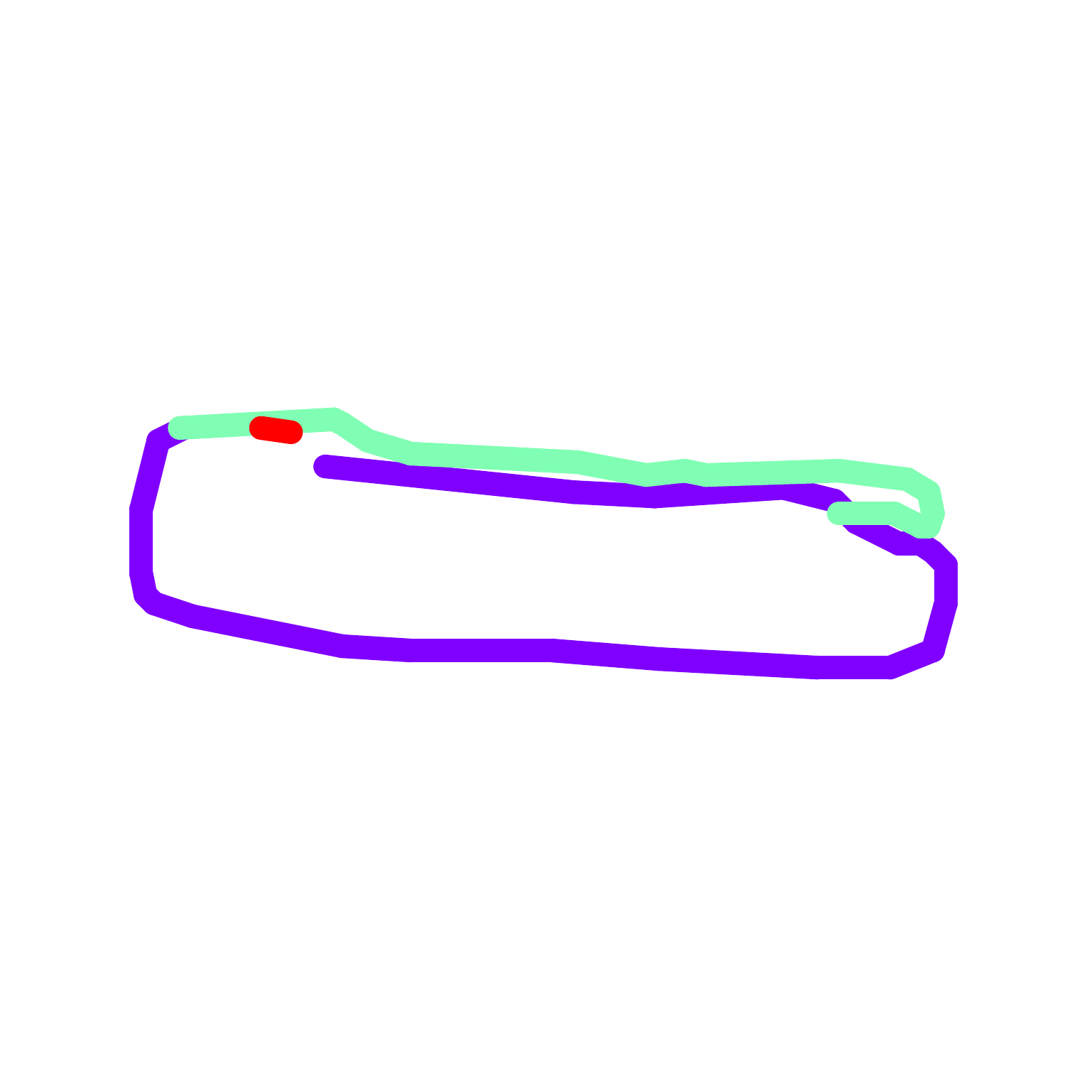

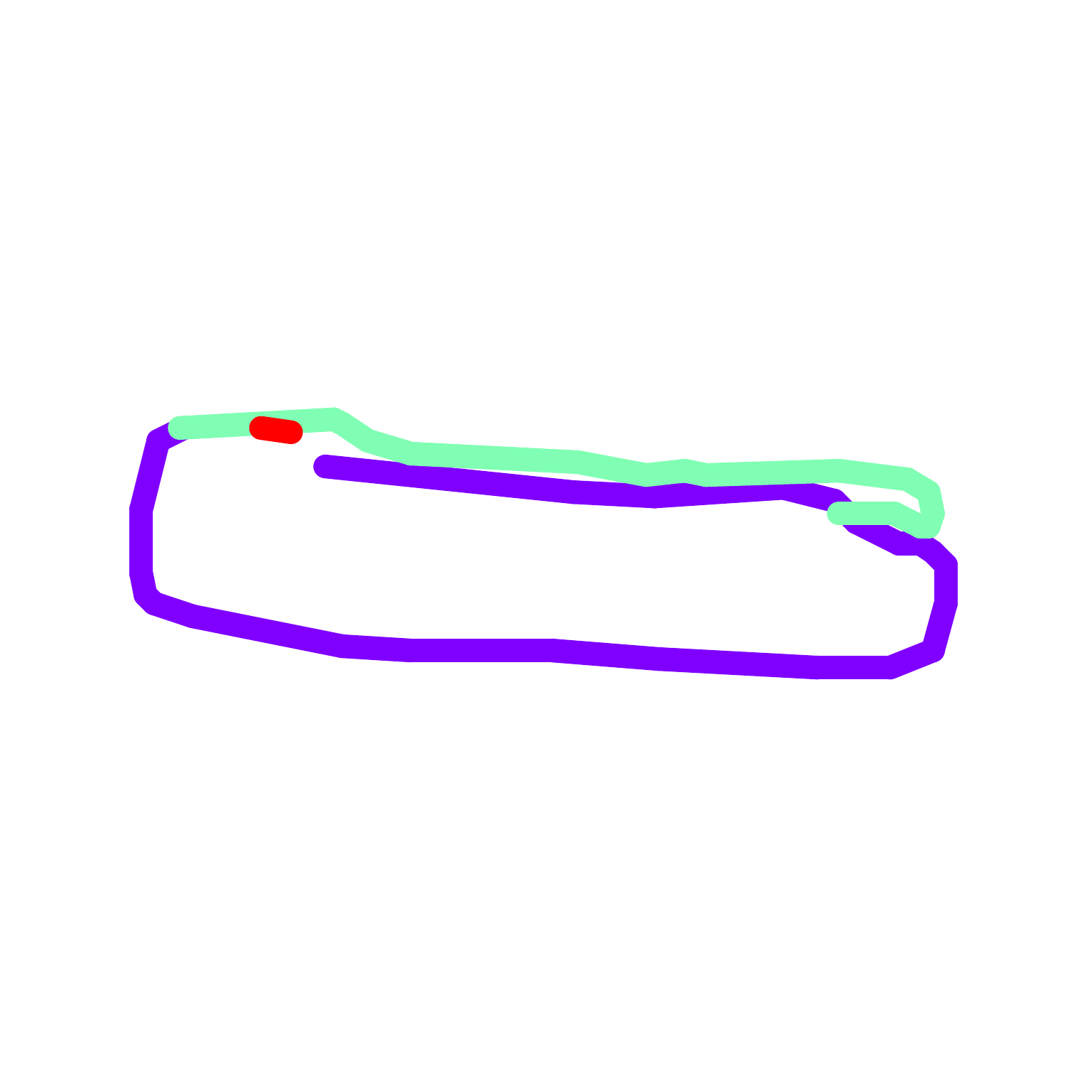

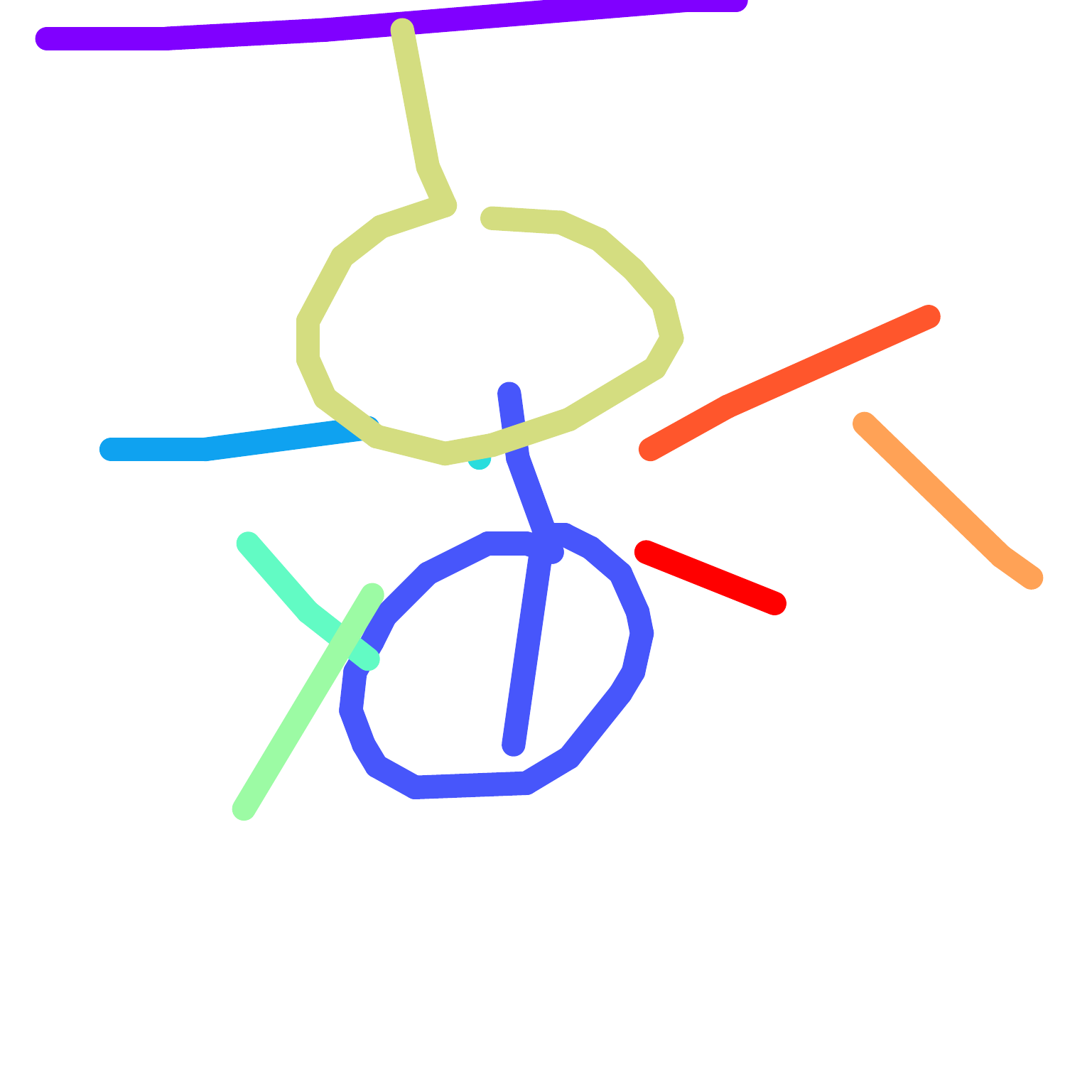

Recovery of SLI (stroke location inversion)

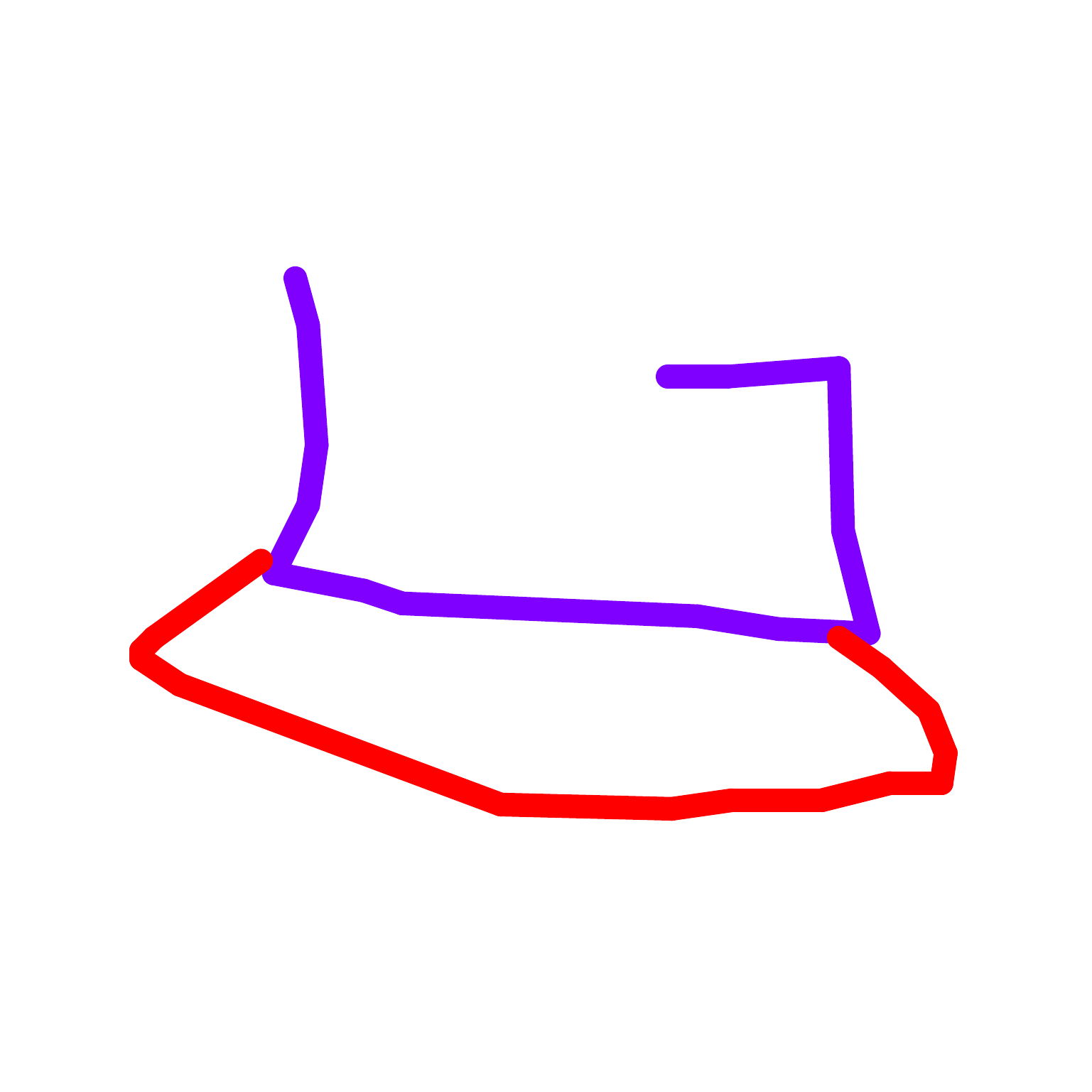

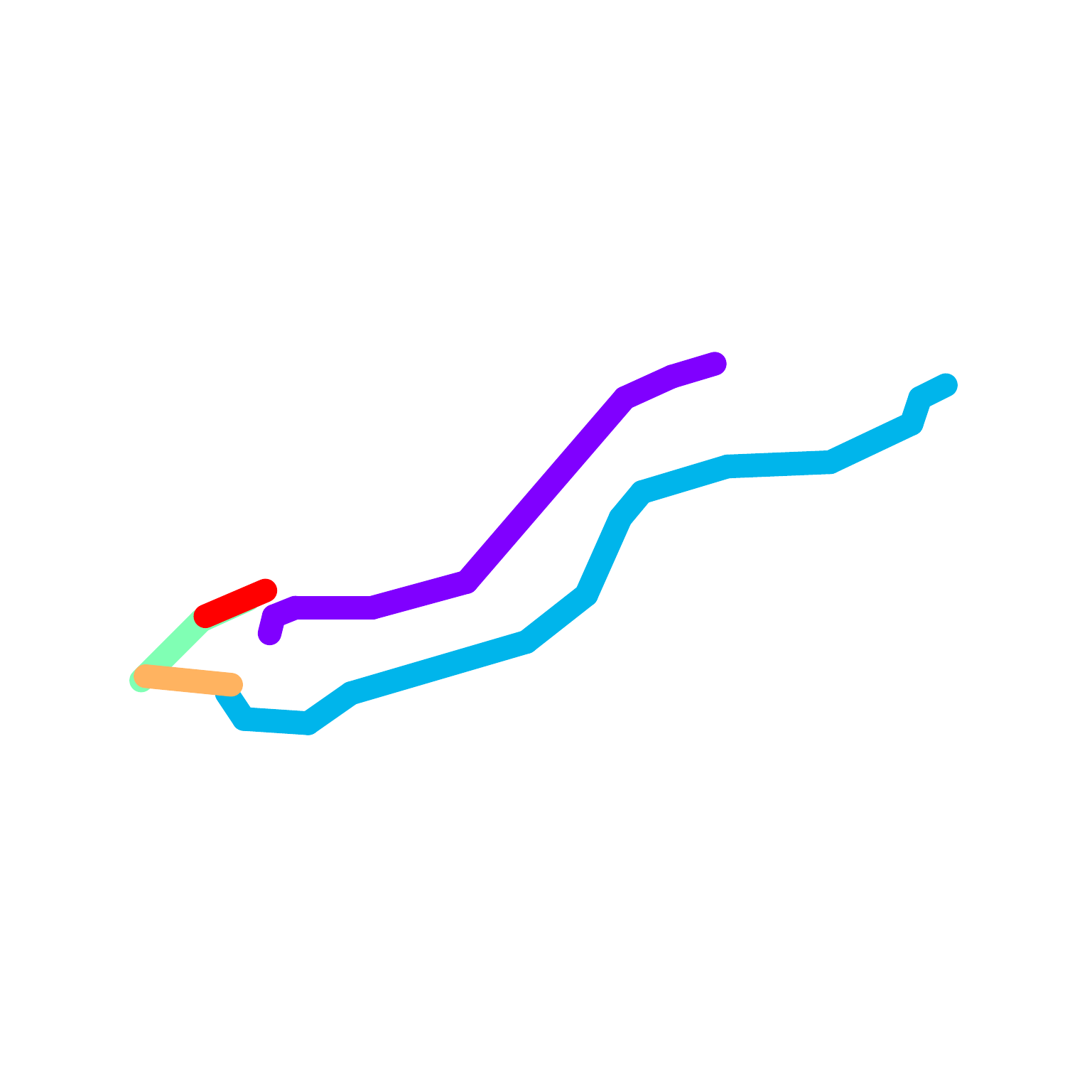

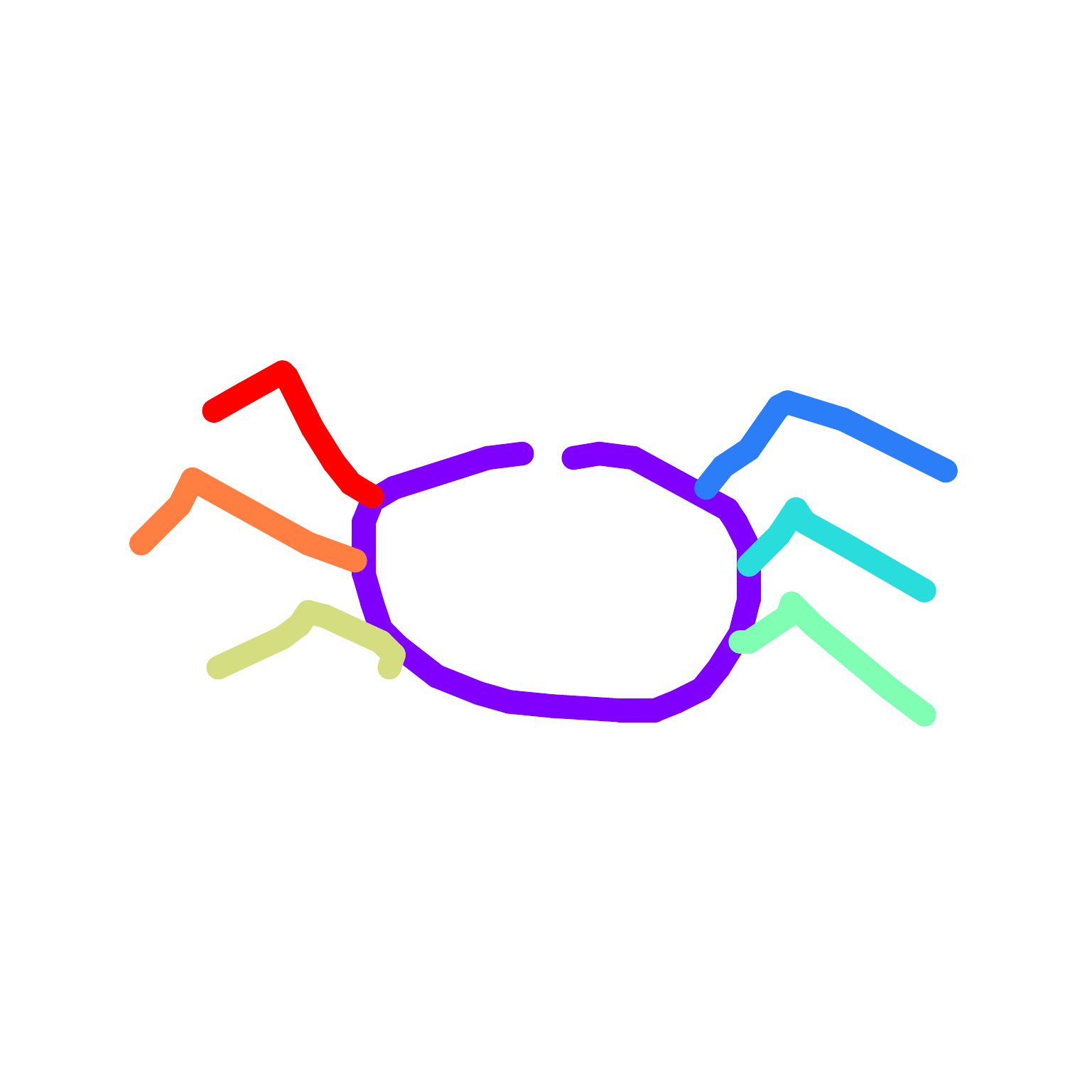

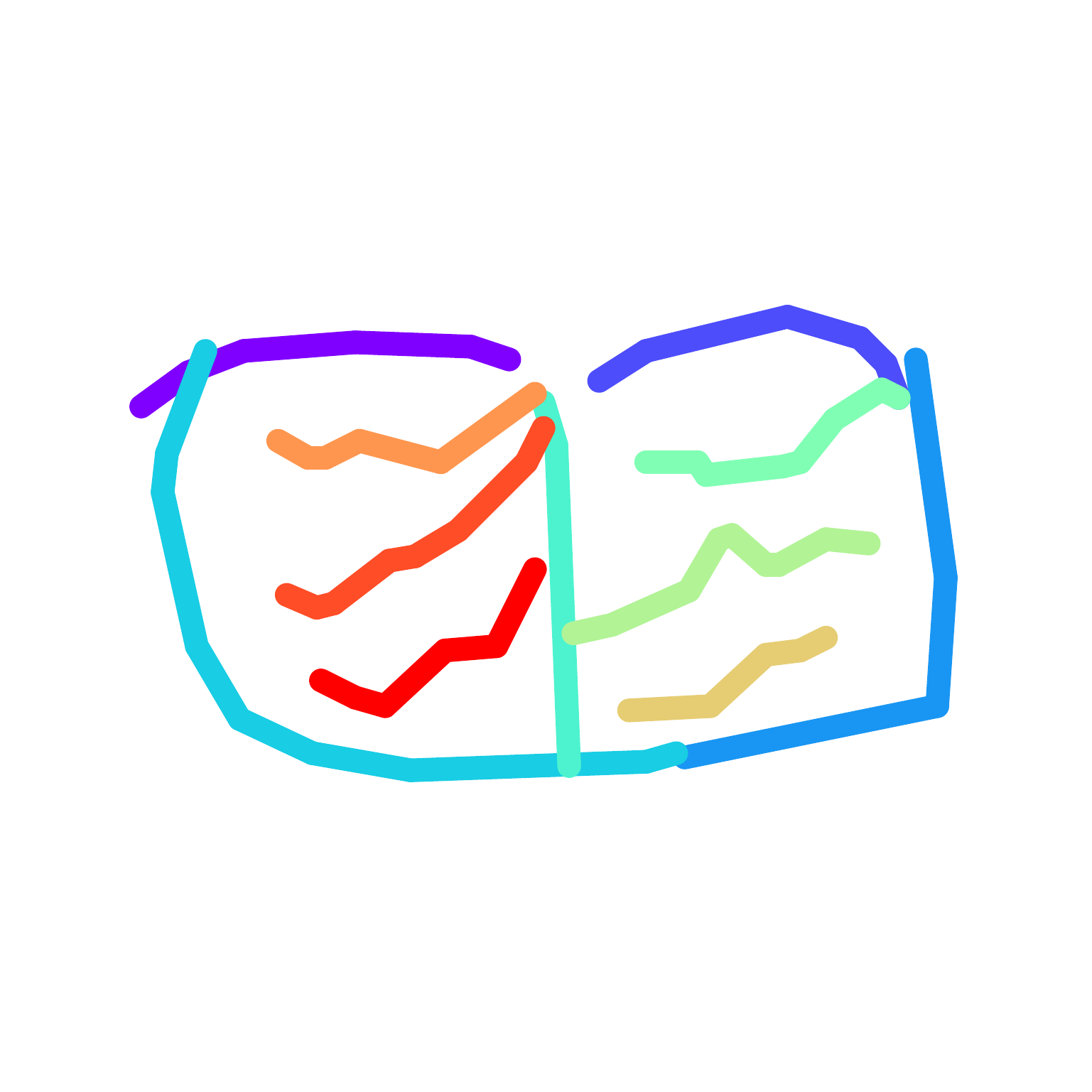

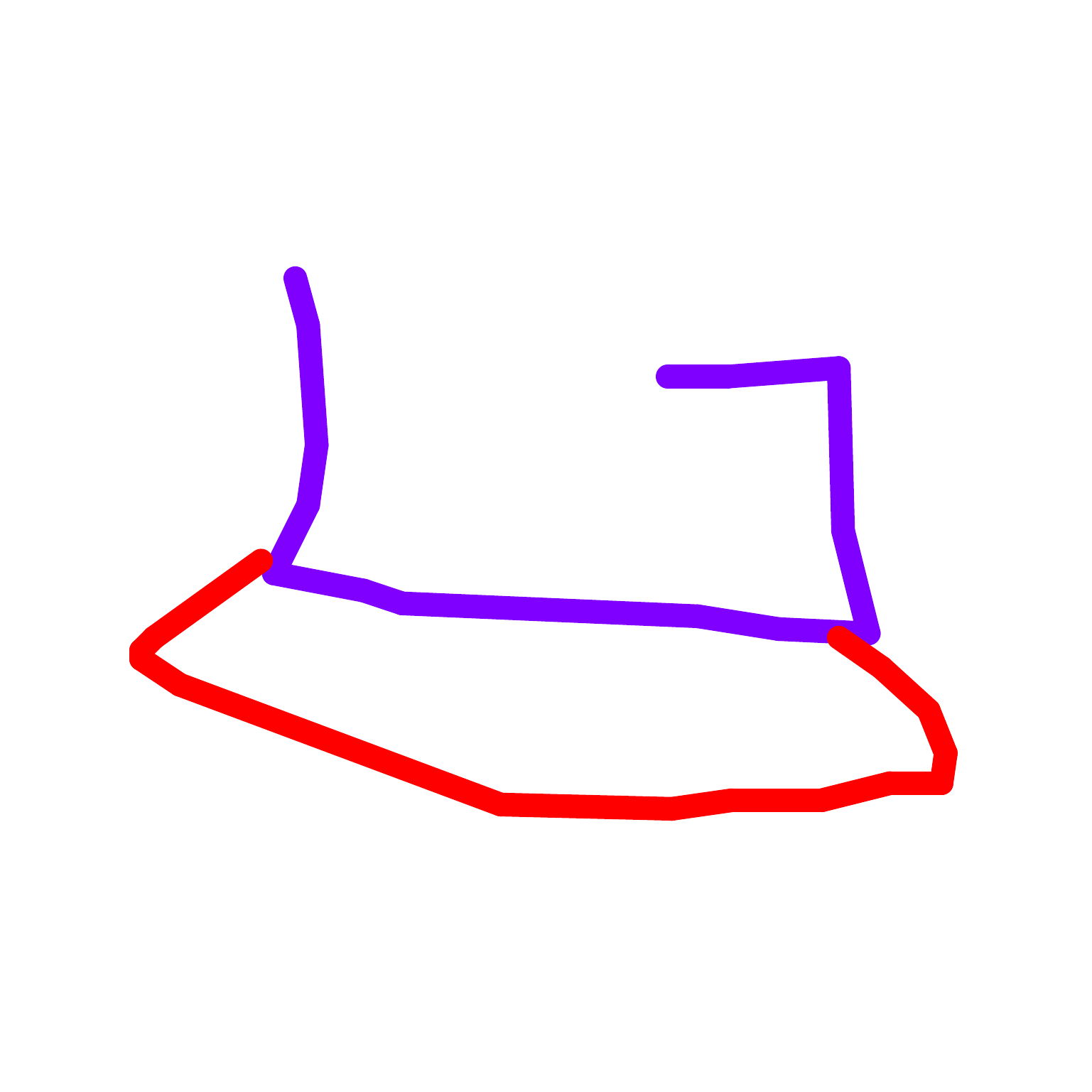

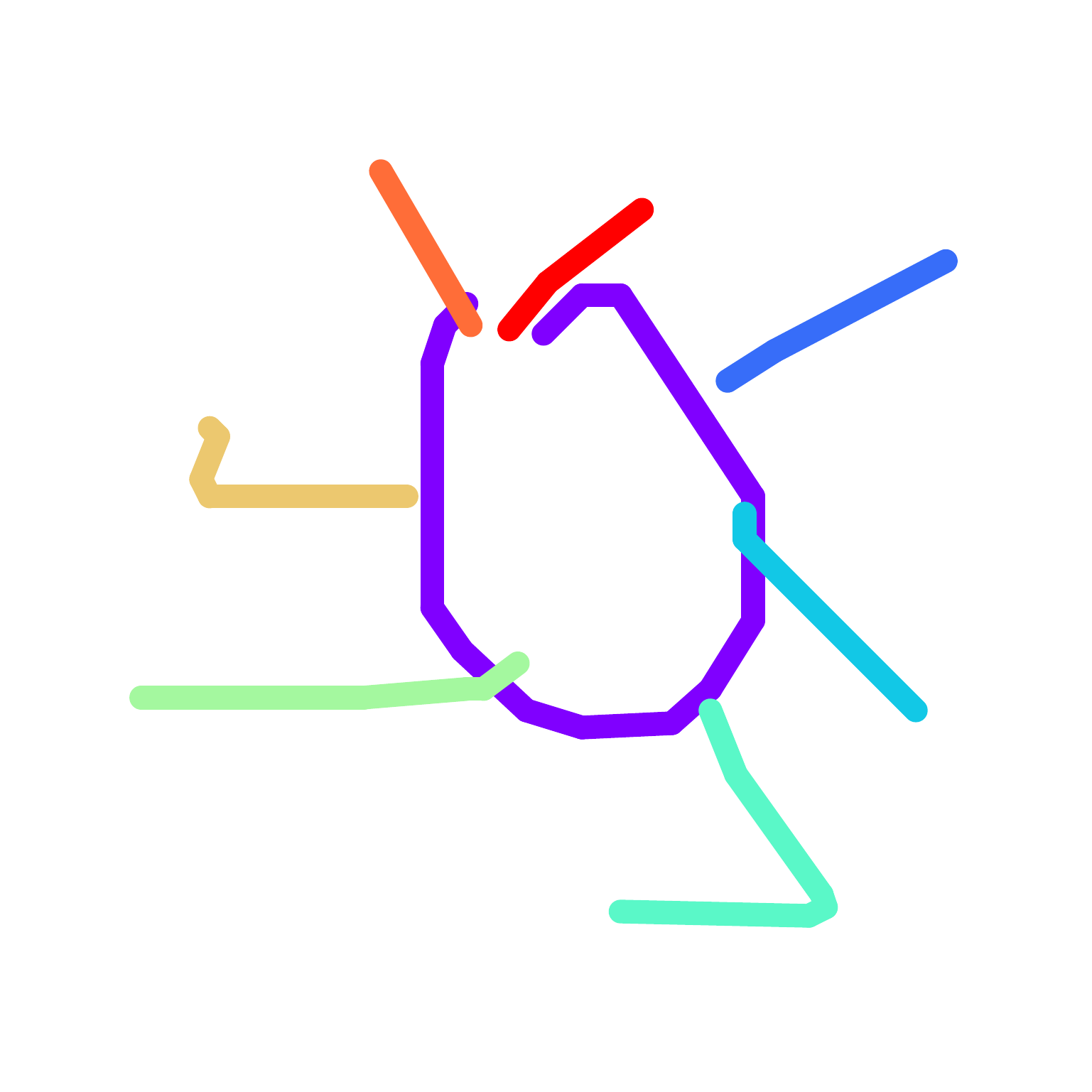

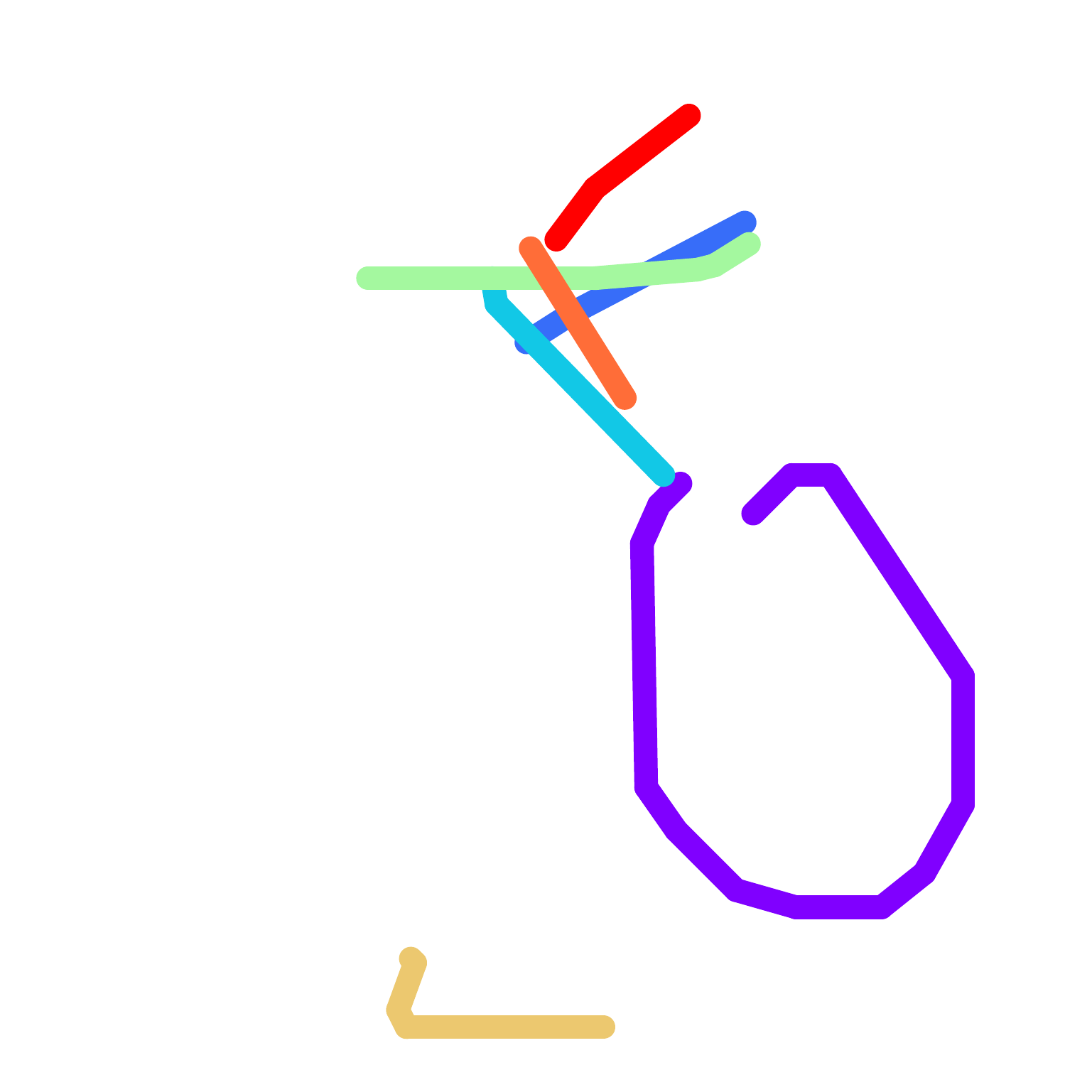

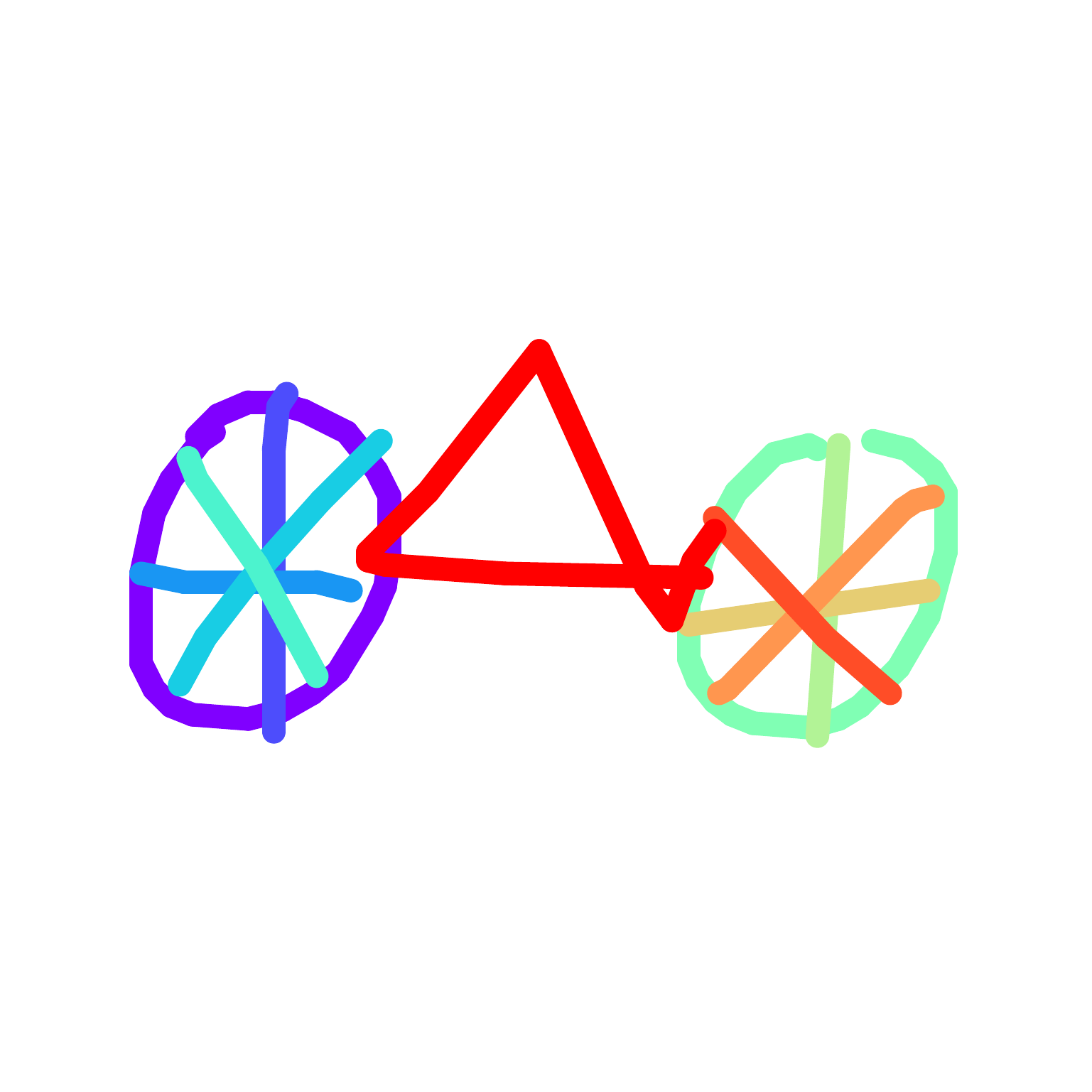

Transfer of SLI

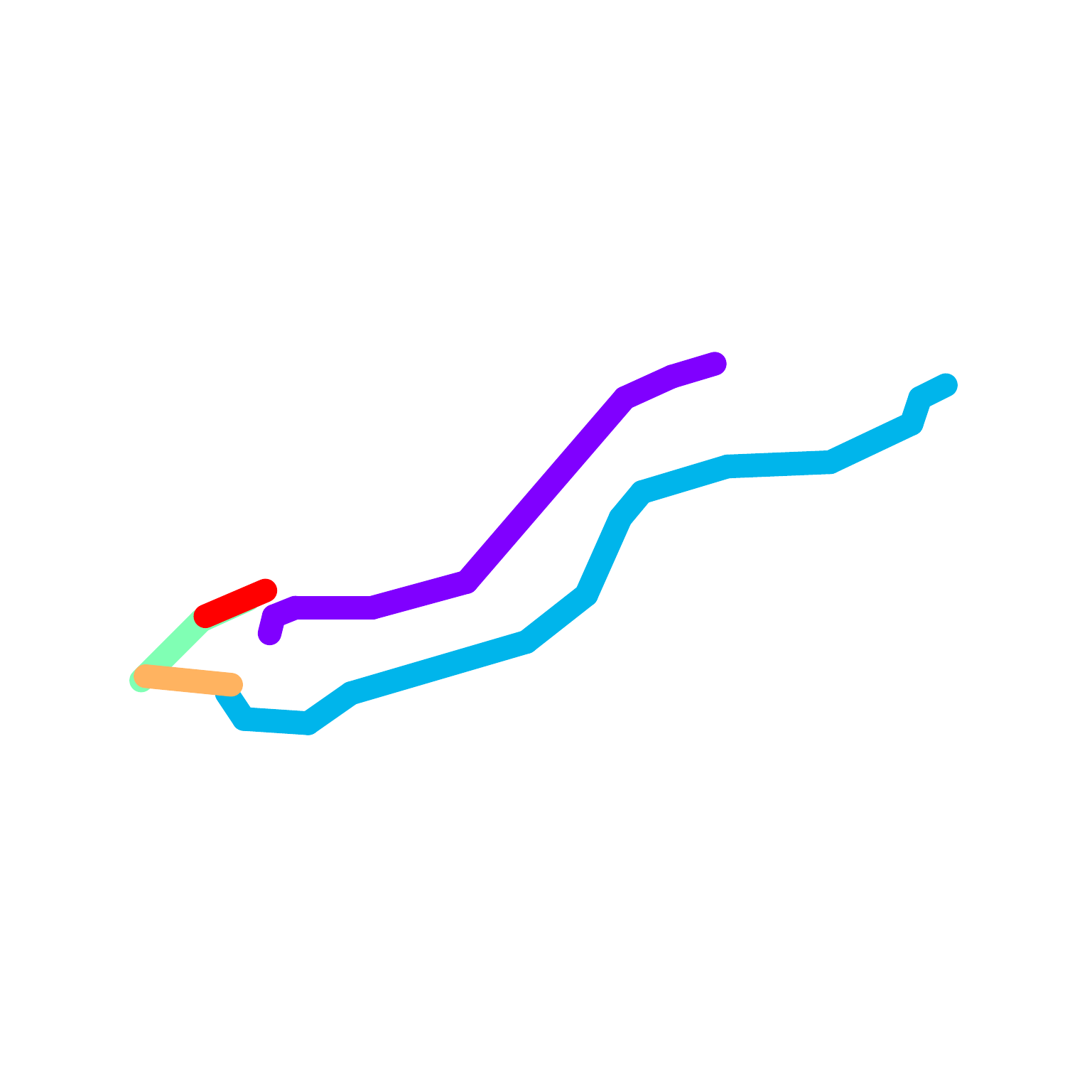

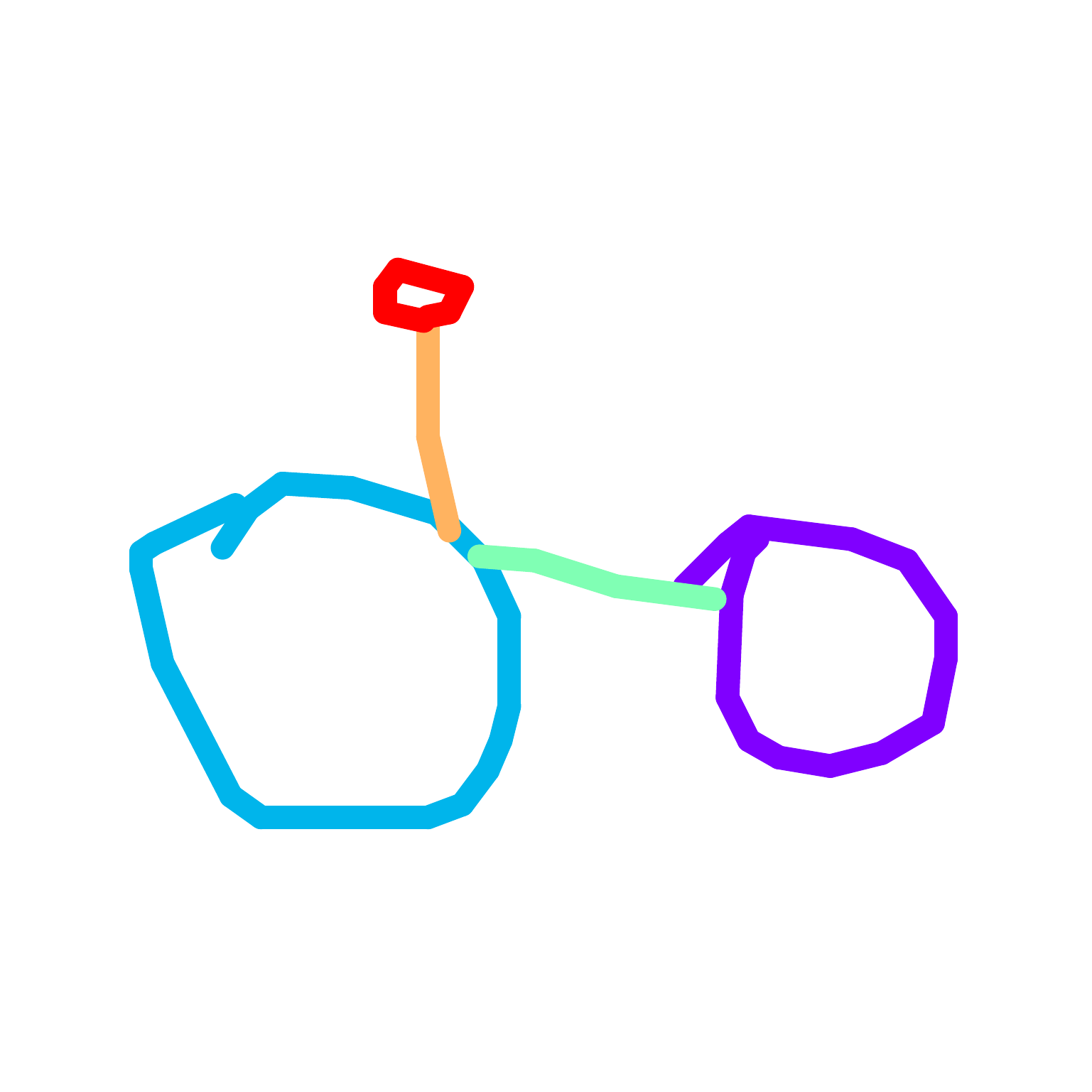

chair -> broom

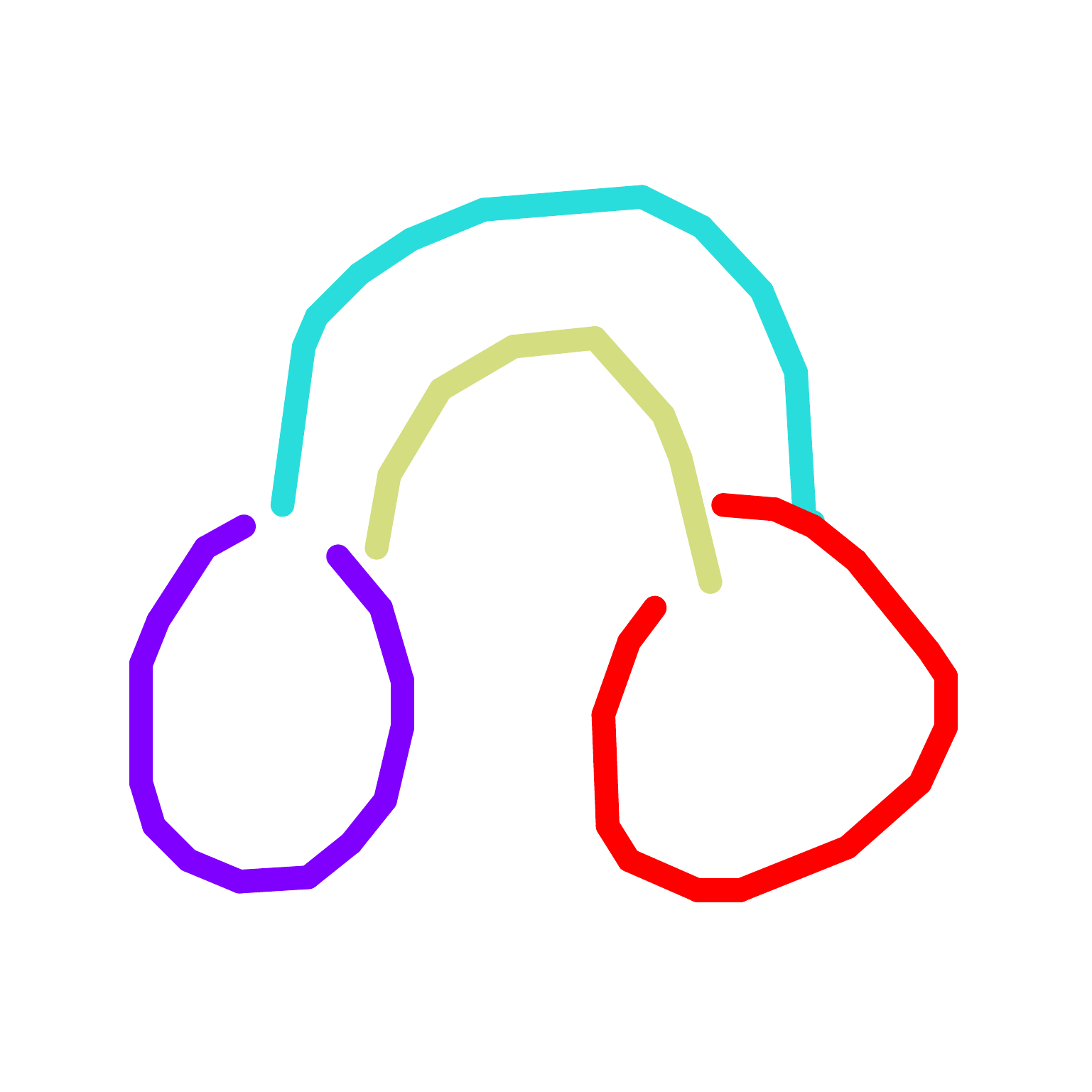

sun -> apple

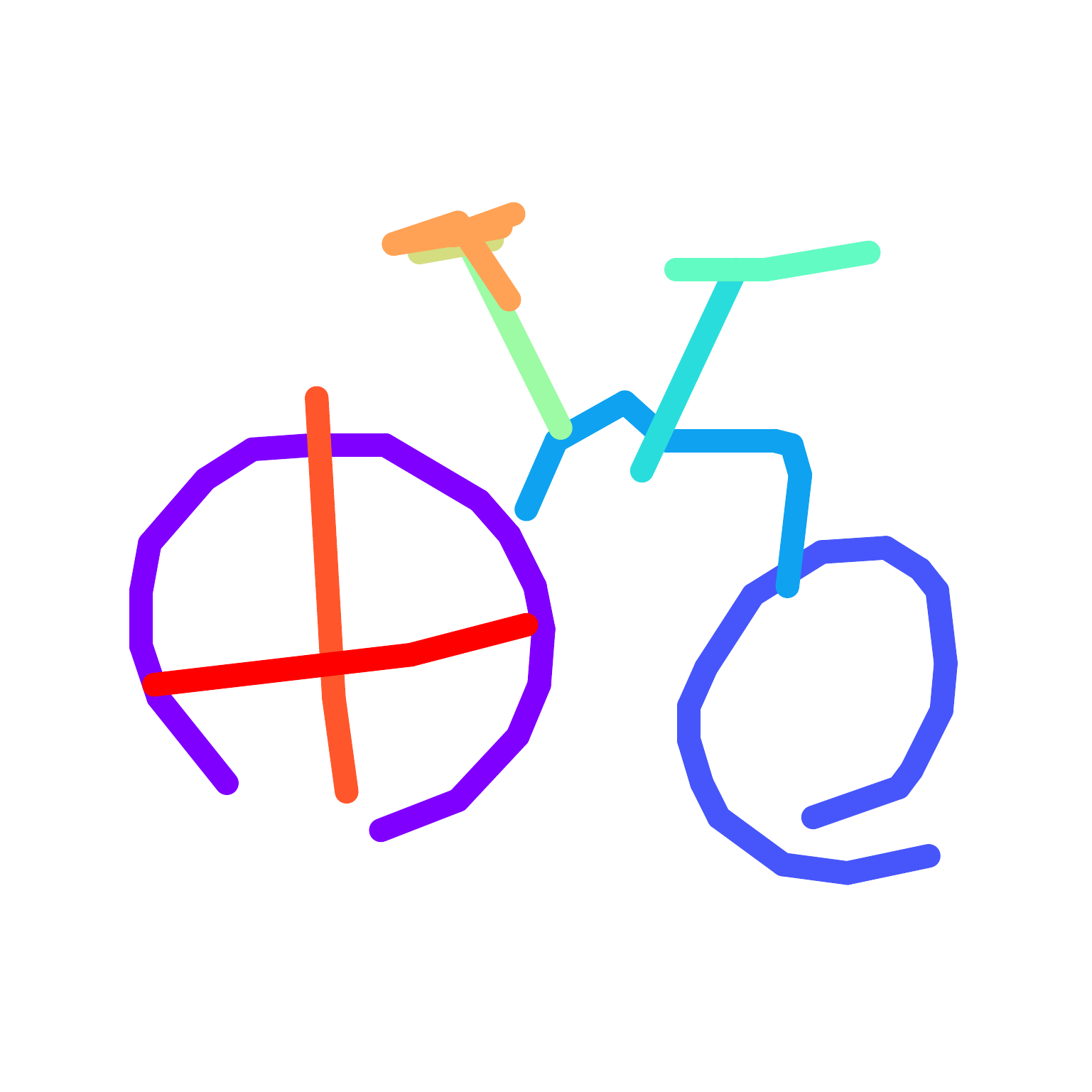

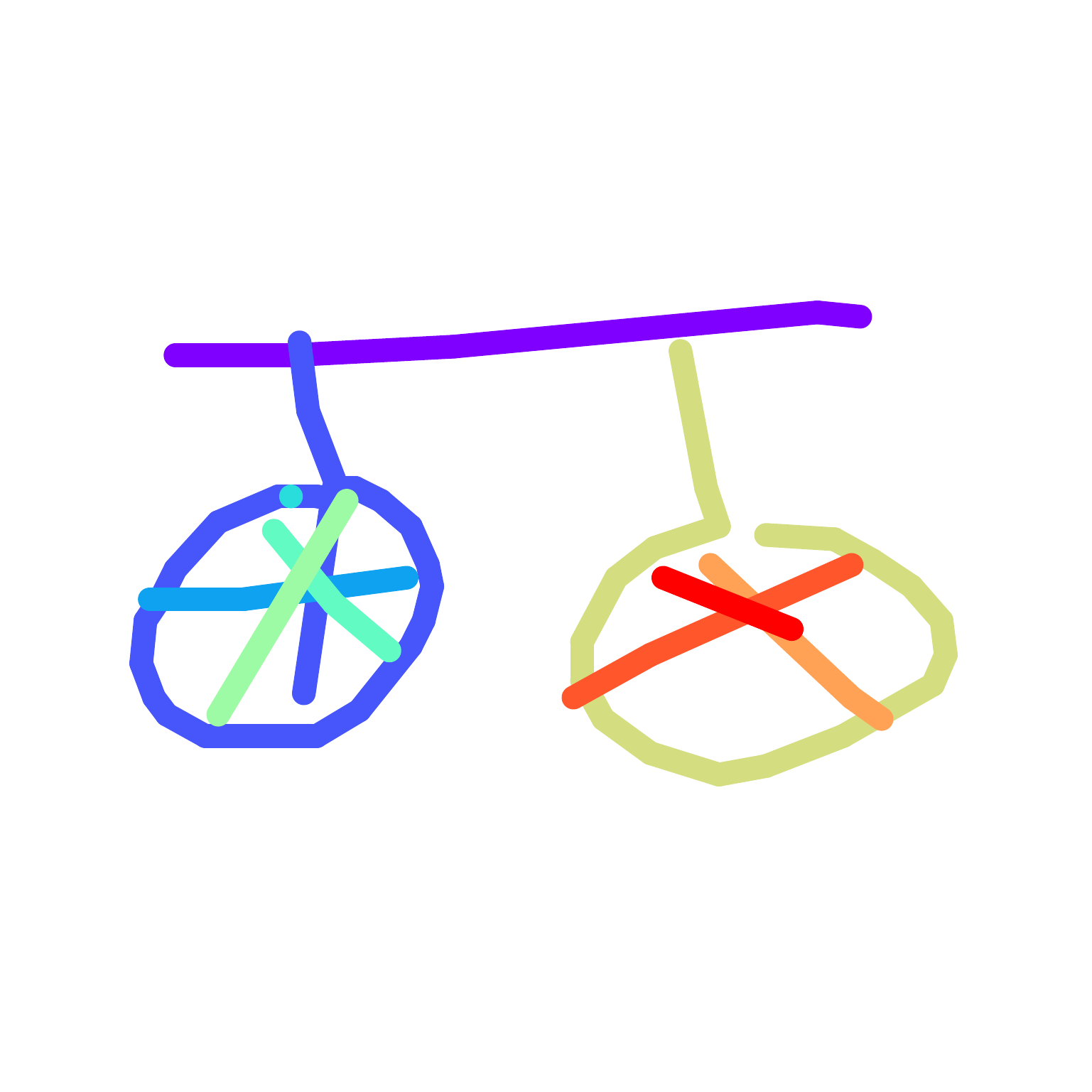

bicycle -> camera

car -> bicycle

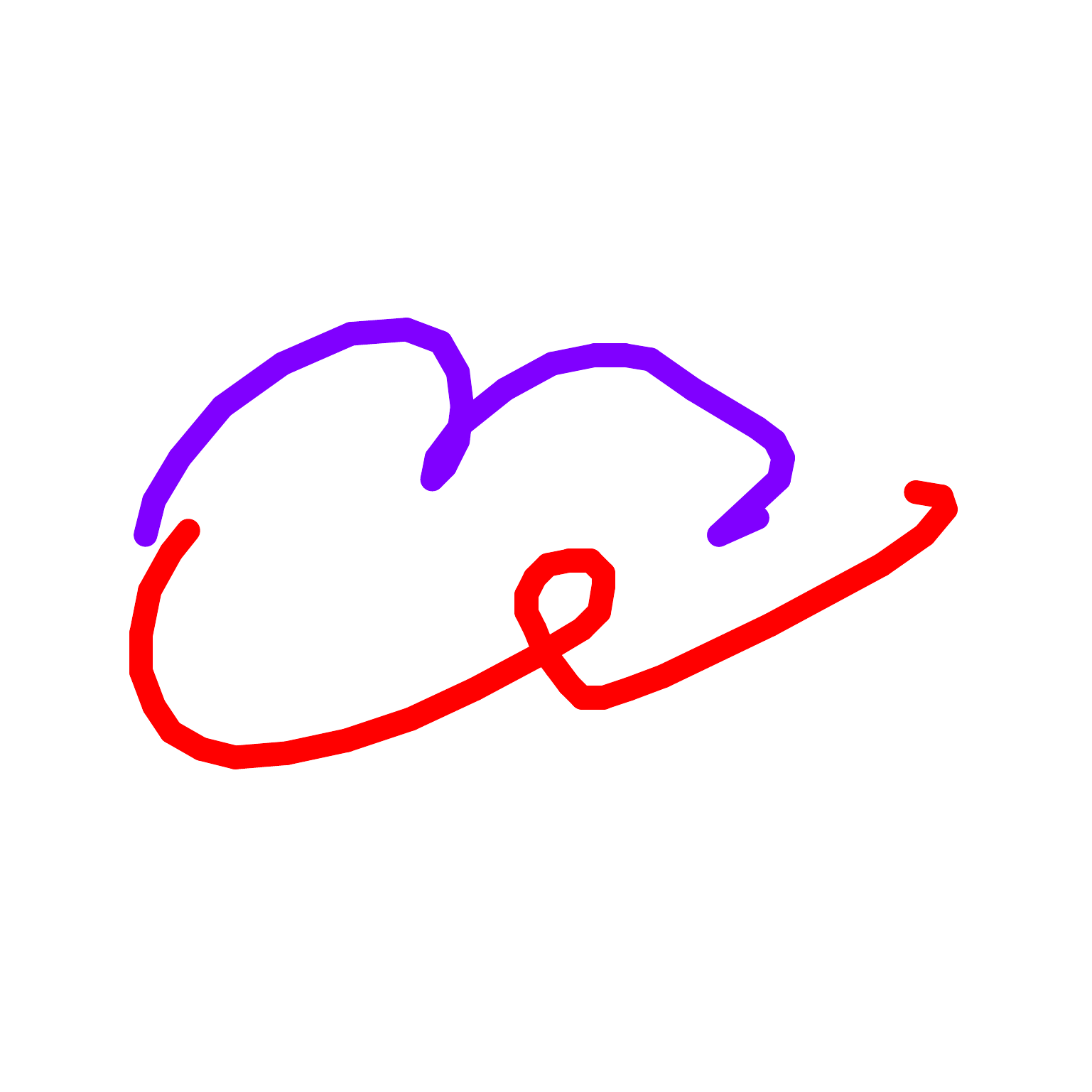

tree -> cloud

airplane -> bed

book -> pants

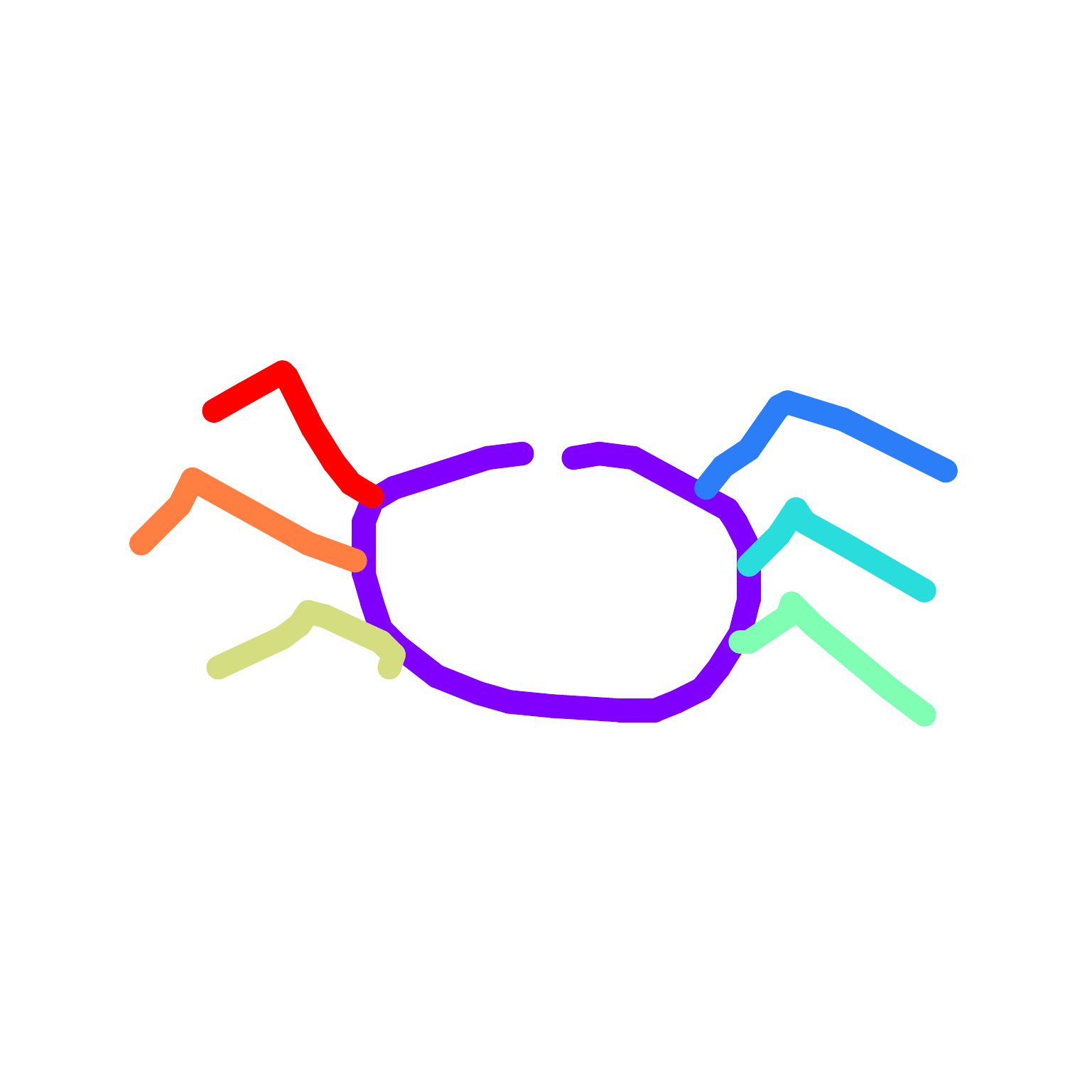

sun -> spider

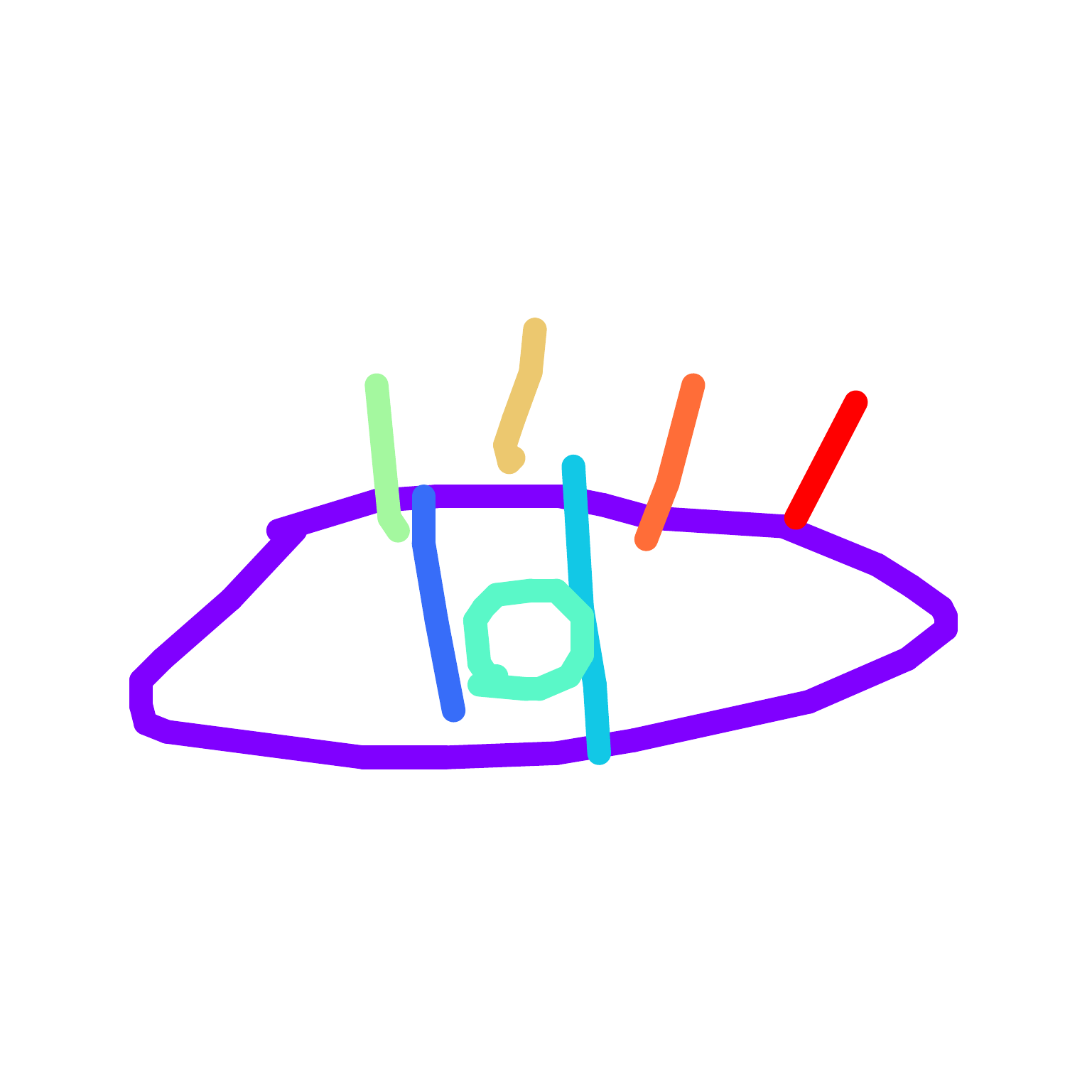

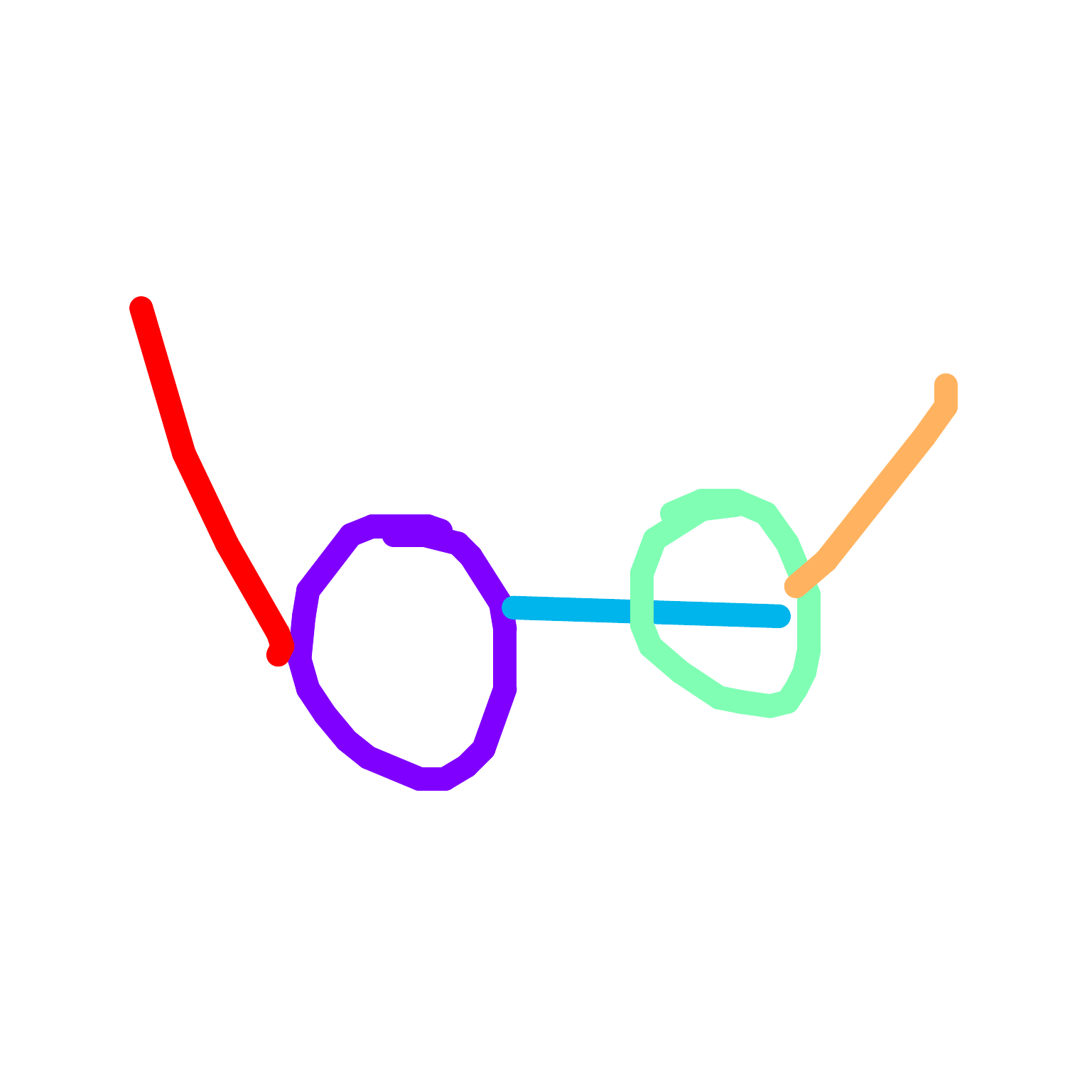

bicycle -> eyeglasses

car -> face

sun -> spider

apple -> clock

sun -> spider

cell_phone -> book

spider -> bicycle

bicycle -> spider

face -> clock

bicycle -> cell_phone

sun -> spider

flower -> cloud